Imagine having your own virtual assistant, kind of like J.A.R.V.I.S. from the Iron Man movies, but personalized for your needs. This AI assistant is designed to help you tackle routine tasks or anything else you teach it to handle.

In this article, we’ll show you an example of what our AI assistant can do. We’re going to create an AI that can provide basic insights into our site’s content, helping us manage both the site and its content more effectively.

To build this, we’ll use three main tools: OpenAI, LangChain, and Next.js.

OpenAI

OpenAI, if you don’t already know, is an AI research organization known for ChatGPT, which can generate human-like responses. It also provides an API that lets developers use these AI capabilities in their own applications.

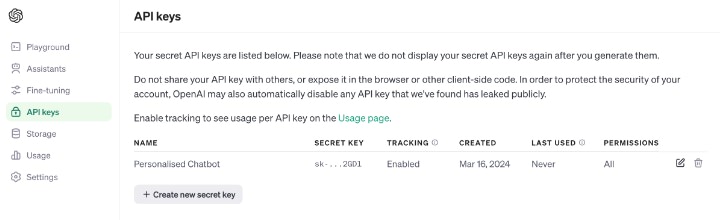

To get your API key, you can sign up on the OpenAI Platform. After signing up, you can create a key from the API keys section of your dashboard.

Once you’ve generated an API key, store it on your computer as an environment variable named OPENAI_API_KEY. This is the standard name that the OpenAI and LangChain libraries look for, so you won’t need to pass the key manually in your code.

Do note that Windows, macOS, and Linux each have their own way to set an environment variable.

Windows

- Right-click on “This PC” or “My Computer” and select “Properties”.

- Click on “Advanced system settings” on the left sidebar.

- In the System Properties window, click on the “Environment Variables” button.

- Under “System variables” or “User variables”, click “New” and enter OPENAI_API_KEY as the name and your API key as the value. Open a new terminal afterwards so it picks up the variable.

macOS and Linux

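To set a permanent variable, add the following line to your shell configuration file, such as ~/.bash_profile, ~/.bashrc, or ~/.zshrc, replacing value with your API key:

```bash
export OPENAI_API_KEY=value
```

Then open a new terminal, or reload the file with a command such as source ~/.zshrc, so the change takes effect.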
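Whichever OS you use, it’s worth confirming that the key is visible to Node.js before going further. Below is a minimal sketch of a hypothetical requireEnv helper. It is not part of OpenAI’s or LangChain’s APIs. It returns a variable’s value or throws a descriptive error, and it takes the environment object as a parameter so it is easy to test.

```typescript
// Hypothetical helper (not from OpenAI or LangChain): read a required
// environment variable or fail with a clear message.
// `env` is passed in (e.g. process.env in Node.js) so the function stays testable.
export function requireEnv(
  env: Record<string, string | undefined>,
  name: string,
): string {
  // Treat missing and whitespace-only values the same way.
  const value = env[name]?.trim();
  if (!value) {
    throw new Error(
      `${name} is not set. Add it to your environment, then restart your terminal or dev server.`,
    );
  }
  return value;
}
```

In server-side code you’d call requireEnv(process.env, 'OPENAI_API_KEY'). The OpenAI and LangChain clients still read the variable on their own, so this only gives you an earlier, clearer failure.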

LangChain

LangChain is a framework for building applications powered by large language models. In our case, it provides tools that help us convert text documents into numbers and search through them.

You might wonder, why do we need to do this?

Basically, AI models and computers work well with numbers but not with words, sentences, and their meanings. So we need to convert text into numbers.

This process is called embedding. Each piece of text is turned into a vector, a list of numbers, that represents its meaning.

Embeddings make it easier for computers to analyze and find patterns in language data. They also help capture the meaning of the text they’re given.

For example, let’s say a user sends a query about “fancy cars”. Rather than looking for those exact words in the information source, the system can recognize that the user is probably looking for Ferrari, Maserati, Aston Martin, Mercedes-Benz, and similar brands.
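Here is a toy TypeScript sketch that shows how “similar meaning” becomes arithmetic. It ranks documents by cosine similarity, a common way to compare embedding vectors. The three-dimensional vectors are invented for illustration. Real OpenAI embeddings have hundreds or thousands of dimensions and come from the API. LangChain’s in-memory vector store performs a ranking step along these lines when we call similaritySearch later.

```typescript
// Toy illustration of semantic search over embeddings.
// The vectors below are made up for readability; they are NOT real OpenAI embeddings.

type Embedded = { text: string; vector: number[] };

// Cosine similarity: dot(a, b) / (|a| * |b|), ranging from -1 to 1.
function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) throw new Error('Vectors must have the same length');
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0; // avoid dividing by zero
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Return the k documents whose vectors point in the most similar direction to the query.
function topK(query: number[], docs: Embedded[], k: number): Embedded[] {
  return docs
    .map((doc) => ({ doc, score: cosineSimilarity(query, doc.vector) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k)
    .map(({ doc }) => doc);
}

const docs: Embedded[] = [
  { text: 'Ferrari', vector: [0.9, 0.1, 0.0] },
  { text: 'Aston Martin', vector: [0.8, 0.2, 0.1] },
  { text: 'Banana bread recipe', vector: [0.0, 0.1, 0.9] },
];

// Pretend this is the embedding of the query "fancy cars".
const fancyCars = [0.85, 0.15, 0.05];

// Both car entries rank above the recipe, even though none of them contains the word "fancy".
console.log(topK(fancyCars, docs, 2).map((d) => d.text));
```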

Next.js

We need a framework to create a user interface so users can interact with our chatbot.

In our case, Next.js has everything we need to get our chatbot up and running for end users. We will build the interface with shadcn/ui, a collection of React components, and use Next.js route handlers to create the API endpoint.

Vercel, the company behind Next.js, also provides the AI SDK (the ai package), which makes it easier and quicker to build chat user interfaces.

Data and Other Prerequisites

We’ll also need to prepare some data. It will be processed, stored in a vector store, and sent to OpenAI as extra context for the prompt.

In this example, to keep things simple, I’ve made a JSON file with a list of blog post titles. You can find it in the repository. In a real project, you’d want to retrieve this information directly from your database.

I assume you have a fair understanding of JavaScript, React.js, and npm, because we’ll use them to build our chatbot.

Also, make sure you have Node.js installed on your computer. You can check if it’s installed by typing:

```bash
node -v
```

If you don’t have Node.js installed, you can follow the instructions on the official website.

How’s Everything Going to Work?

To make it easy to understand, here’s a high-level overview of how everything is going to work:

- The user will input a question or query into the chatbot.

- LangChain will retrieve documents related to the user’s query.

- The prompt, the query, and the related documents are sent to the OpenAI API to get a response.

- The response is displayed to the user.
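Here is a framework-free TypeScript sketch of those steps that makes the flow concrete. answerQuery, Retrieve, and Complete are hypothetical names used only for illustration. In the real app, LangChain’s vector store plays the retrieve role and the OpenAI API plays the complete role. Both are passed in, so this sketch can run with stubs.

```typescript
// Hypothetical sketch of the four-step flow; not the app's actual code.
type Retrieve = (query: string) => Promise<string[]>; // stands in for the vector-store search
type Complete = (prompt: string) => Promise<string>;  // stands in for the OpenAI chat call

async function answerQuery(
  query: string,
  retrieve: Retrieve,
  complete: Complete,
): Promise<string> {
  // Step 2: find documents related to the user's query.
  const context = await retrieve(query);

  // Step 3: combine instructions, the query, and the related documents into one prompt.
  const prompt = [
    'Please answer the following question using the provided context.',
    `Question:\n${query}`,
    `Context:\n${context.join('\n')}`,
  ].join('\n\n');

  // Step 4 happens in the caller, which displays whatever the model returns.
  return complete(prompt);
}

// Usage with stub implementations (no network calls): the "model" just echoes the prompt.
answerQuery(
  'Which posts cover Next.js?',
  async () => ['Title: An example post about Next.js'],
  async (prompt) => prompt,
).then(console.log);
```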

Now that we have a high-level overview of how everything is going to work, let’s get started!

Installing Dependencies

Let’s start by installing the necessary packages to build the user interface for our chatbot. Type the following command:

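```bash
npx create-next-app@latest ai-assistant --typescript --tailwind --eslint
```

This command will install and set up Next.js with TypeScript, Tailwind CSS, and ESLint. shadcn/ui isn’t part of this command; we’ll add its components later. The command may ask you a few more questions. The defaults are fine for most of them, but choose to use the src/ directory and the App Router, because the file paths in this tutorial (such as src/app/api/chat) assume both.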

Once the installation is complete, navigate to the project directory:

```bash
cd ai-assistant
```

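Next, we need to install a few additional dependencies that weren’t included in the previous command. These are ai, openai, langchain, and @langchain/openai for the chatbot logic, plus remark-gfm, react-markdown, and react-error-boundary for rendering responses.

```bash
npm i ai@3 openai langchain @langchain/openai remark-gfm react-markdown react-error-boundary
```

The code in this tutorial uses the 3.x API of the ai package (OpenAIStream, StreamingTextResponse, and the ai/react entry point). Later major versions removed these, so pinning ai@3 keeps the examples working.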

Building the Chat Interface

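To create the chat interface, we’ll use some pre-built components from shadcn/ui: scroll area, button, avatar, card, and input. If you haven’t initialized shadcn/ui in this project yet, run npx shadcn-ui@latest init first. It creates a components.json config file and asks a few setup questions. Newer releases of the CLI are published as shadcn, so npx shadcn@latest works the same way. Then add the components:

```bash
npx shadcn-ui@latest add scroll-area button avatar card input
```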

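This command will automatically pull the components and add them to your ui components directory. The code below imports them from @/ui/...; with the default shadcn/ui setup, the path is @/components/ui/... instead, so adjust the imports to match the aliases in your components.json.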

Next, let’s make a new file named Chat.tsx in the src/components directory. This file will hold our chat interface.

We’ll use the useChat hook from the ai package to manage tasks such as capturing user input, sending queries to the API, and receiving the AI’s responses.

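OpenAI’s responses are plain text that often contains Markdown formatting. To render it as HTML, we’ll use react-markdown together with the remark-gfm plugin, which adds support for GitHub Flavored Markdown features such as tables, strikethrough, and task lists. The prose classes in the code come from Tailwind’s @tailwindcss/typography plugin, so install it and register it in your Tailwind config if you want that styling.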

We’ll also need to display avatars in the chat interface. For this tutorial, I’m using Avatartion to generate avatars for both the AI and the user. These avatars are stored in the public directory as user.png and kovi.png.

Below is the code we’ll add to this file.

```tsx
'use client';

import { Avatar, AvatarFallback, AvatarImage } from '@/ui/avatar';
import { Button } from '@/ui/button';
import {
  Card,
  CardContent,
  CardFooter,
  CardHeader,
  CardTitle,
} from '@/ui/card';
import { Input } from '@/ui/input';
import { ScrollArea } from '@/ui/scroll-area';
import { useChat } from 'ai/react';
import { Send } from 'lucide-react';
import { FunctionComponent, memo } from 'react';
import { ErrorBoundary } from 'react-error-boundary';
import ReactMarkdown, { Options } from 'react-markdown';
import remarkGfm from 'remark-gfm';

/**
 * Memoized ReactMarkdown component.
 * It only re-renders when the Markdown source changes, so earlier messages
 * aren't re-parsed while a new response streams in.
 */
const MemoizedReactMarkdown: FunctionComponent<Options> = memo(
  ReactMarkdown,
  (prevProps, nextProps) => prevProps.children === nextProps.children
);

/**
 * Represents a chat component that allows users to interact with a chatbot.
 * The component displays a chat interface with messages exchanged between the user and the chatbot.
 * Users can input their questions and receive responses from the chatbot.
 */
export const Chat = () => {
  // useChat keeps the message list and input state, posts the conversation
  // to /api/chat, and appends the streamed reply.
  const { handleInputChange, handleSubmit, input, messages } = useChat({
    api: '/api/chat',
  });

  return (
    <Card className="w-full max-w-3xl min-h-[640px] grid gap-3 grid-rows-[max-content,1fr,max-content]">
      <CardHeader className="row-span-1">
        <CardTitle>AI Assistant</CardTitle>
      </CardHeader>
      <CardContent className="h-full row-span-2">
        <ScrollArea className="h-full w-full">
          {messages.map((message) => (
            <div
              className="flex gap-3 text-slate-600 text-sm mb-4"
              key={message.id}
            >
              {message.role === 'user' && (
                <Avatar>
                  <AvatarFallback>U</AvatarFallback>
                  <AvatarImage src="/user.png" />
                </Avatar>
              )}
              {message.role === 'assistant' && (
                <Avatar>
                  <AvatarFallback>AI</AvatarFallback>
                  <AvatarImage src="/kovi.png" />
                </Avatar>
              )}
              {/* A div, not a p: rendered Markdown contains block elements such as p and ul. */}
              <div className="leading-relaxed">
                <span className="block font-bold text-slate-700">
                  {message.role === 'user' ? 'User' : 'AI'}
                </span>
                {/* If Markdown rendering fails, show the raw message text instead. */}
                <ErrorBoundary
                  fallback={
                    <div className="prose prose-neutral">{message.content}</div>
                  }
                >
                  {/* Styles go on a wrapper div because recent react-markdown versions dropped the className prop. */}
                  <div className="prose prose-neutral prose-sm">
                    <MemoizedReactMarkdown remarkPlugins={[remarkGfm]}>
                      {message.content}
                    </MemoizedReactMarkdown>
                  </div>
                </ErrorBoundary>
              </div>
            </div>
          ))}
        </ScrollArea>
      </CardContent>
      <CardFooter className="h-max row-span-3">
        <form className="w-full flex gap-2" onSubmit={handleSubmit}>
          <Input
            maxLength={1000}
            onChange={handleInputChange}
            placeholder="Your question..."
            value={input}
          />
          <Button aria-label="Send" type="submit">
            <Send size={16} />
          </Button>
        </form>
      </CardFooter>
    </Card>
  );
};
```

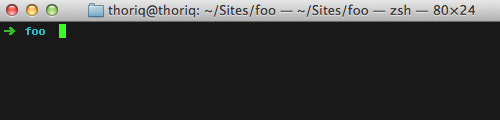

Let’s check out the UI. First, make sure the Chat component is rendered on a page, for example by importing it in src/app/page.tsx. Then enter the following command to start the Next.js development server:

```bash
npm run dev
```

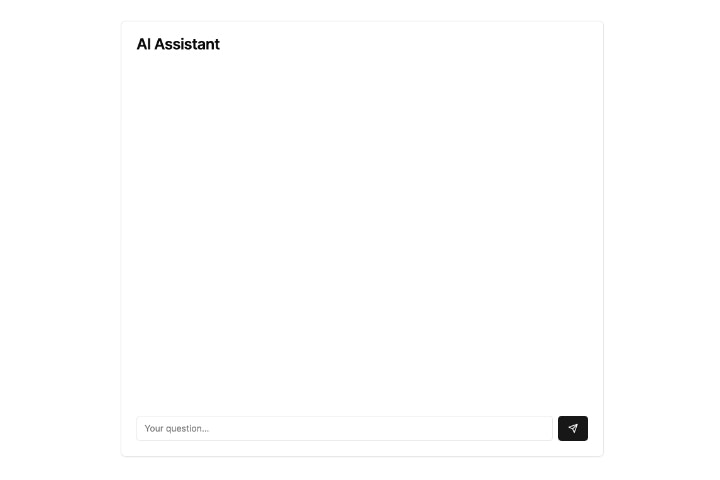

By default, the development server runs at http://localhost:3000. Here’s how our chatbot interface will appear in the browser:

Setting Up the API Endpoint

Next, we need to set up the API endpoint that the UI will use when the user submits their query. To do this, we create a new file named route.ts in the src/app/api/chat directory. Below is the code that goes into the file.

```ts
import { readData } from '@/lib/data';
import { OpenAIEmbeddings } from '@langchain/openai';
import { OpenAIStream, StreamingTextResponse } from 'ai';
import { Document } from 'langchain/document';
import { MemoryVectorStore } from 'langchain/vectorstores/memory';
import OpenAI from 'openai';

/**
 * Create a vector store from a list of documents using OpenAI embeddings.
 * Note: this embeds every title, so calling it on each request repeats that work.
 */
const createStore = () => {
  const data = readData();

  return MemoryVectorStore.fromDocuments(
    data.map((title) => {
      return new Document({
        pageContent: `Title: ${title}`,
      });
    }),
    new OpenAIEmbeddings()
  );
};

// The client reads OPENAI_API_KEY from the environment.
const openai = new OpenAI();

export async function POST(req: Request) {
  const { messages } = (await req.json()) as {
    messages: { content: string; role: 'assistant' | 'user' }[];
  };

  // Use the most recent user message as the question.
  const questions = messages
    .filter((m) => m.role === 'user')
    .map((m) => m.content);
  const latestQuestion = questions[questions.length - 1] || '';

  // Retrieve up to 100 titles most similar to the latest question.
  const store = await createStore();
  const results = await store.similaritySearch(latestQuestion, 100);

  const response = await openai.chat.completions.create({
    messages: [
      {
        content: `You're a helpful assistant. You're here to help me with my questions.`,
        role: 'system',
      },
      {
        content: `
Please answer the following question using the provided context.
If the context is not provided, please simply say that you're not able to answer
the question.

Question:
${latestQuestion}

Context:
${results.map((r) => r.pageContent).join('\n')}
`,
        role: 'user',
      },
    ],
    model: 'gpt-4',
    stream: true,
    temperature: 0,
  });

  // Convert the OpenAI stream into a streaming HTTP response for useChat.
  const stream = OpenAIStream(response);
  return new StreamingTextResponse(stream);
}
```

Let’s break down some important parts of the code to understand what’s happening, as this code is crucial for making our chatbot work.

First, the following code enables the endpoint to receive a POST request. It reads the messages array from the request body. The useChat hook from the ai package builds and sends this array from the front end.

```ts
export async function POST(req: Request) {
  const { messages } = (await req.json()) as {
    messages: { content: string; role: 'assistant' | 'user' }[];
  };
}
```
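The as { messages: ... } assertion tells TypeScript what the body looks like, but nothing checks it at runtime. Below is a minimal sketch of a runtime check. isChatMessage and parseMessages are hypothetical helpers. Extra fields that the ai package sends, such as id, are ignored. Roles other than user and assistant, such as system, are rejected, which suits this app but may not suit others.

```typescript
// Hypothetical runtime validation for the chat request body.
type ChatMessage = { content: string; role: 'assistant' | 'user' };

function isChatMessage(value: unknown): value is ChatMessage {
  if (typeof value !== 'object' || value === null) return false;
  const { content, role } = value as Record<string, unknown>;
  return typeof content === 'string' && (role === 'assistant' || role === 'user');
}

// Returns the messages array, or null if the body doesn't have the expected shape.
function parseMessages(body: unknown): ChatMessage[] | null {
  if (typeof body !== 'object' || body === null) return null;
  const { messages } = body as Record<string, unknown>;
  if (!Array.isArray(messages) || !messages.every(isChatMessage)) return null;
  return messages;
}
```

In the route, you could call parseMessages(await req.json()) and return new Response('Invalid request body', { status: 400 }) when it returns null.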

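In this section of the code, we read the titles from the JSON file and wrap each one in a LangChain Document. We then pass the documents to MemoryVectorStore.fromDocuments, which embeds them with OpenAIEmbeddings and keeps the vectors in memory.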

```ts
const createStore = () => {
  const data = readData();

  return MemoryVectorStore.fromDocuments(
    data.map((title) => {
      return new Document({
        pageContent: `Title: ${title}`,
      });
    }),
    new OpenAIEmbeddings()
  );
};
```
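The readData helper imported from @/lib/data isn’t shown in the article. Here is a minimal sketch of what src/lib/data.ts could look like. The file location data/titles.json and the parseTitles helper are assumptions, not the author’s code. The sketch assumes the file holds a JSON array of title strings and reads it synchronously, matching how createStore() calls readData().

```typescript
// Hypothetical sketch of src/lib/data.ts; the data/titles.json location is an assumption.
import { readFileSync } from 'node:fs';
import * as path from 'node:path';

// Parse and validate the JSON so a malformed file fails with a clear message.
export function parseTitles(json: string): string[] {
  const parsed: unknown = JSON.parse(json);
  if (!Array.isArray(parsed) || !parsed.every((item) => typeof item === 'string')) {
    throw new Error('Expected a JSON array of strings');
  }
  return parsed;
}

// Read the titles file relative to the project root (where `next dev` is started).
export function readData(): string[] {
  const file = path.join(process.cwd(), 'data', 'titles.json');
  return parseTitles(readFileSync(file, 'utf8'));
}
```

Another option is to import the JSON file directly, since the TypeScript config that create-next-app generates enables resolveJsonModule.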

For the sake of simplicity in this tutorial, we store the vectors in memory. They’re rebuilt whenever createStore() runs and lost when the server restarts. Ideally, you’d store them in a vector database, and there are several options to choose from.
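As written, the route calls createStore() on every request, so every title is sent to the embeddings API each time. A small in-process cache avoids that while you’re still using the in-memory store. Below is a sketch of a hypothetical cacheAsync helper. It runs an async factory once, reuses the resulting promise, and clears the cache if the factory fails so the next call can retry.

```typescript
// Hypothetical helper: run an async factory once and share its promise with later callers.
function cacheAsync<T>(factory: () => Promise<T>): () => Promise<T> {
  let pending: Promise<T> | undefined;
  return () => {
    if (!pending) {
      pending = factory().catch((error: unknown) => {
        pending = undefined; // forget the failure so a later call can try again
        throw error;
      });
    }
    return pending;
  };
}
```

In the route, you could define const getStore = cacheAsync(createStore); at module level and replace await createStore() with await getStore(). The cache lives in a single server process. Each serverless instance builds its own copy, and changes to the titles only show up after a restart.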

Then we retrieve the documents most relevant to the user’s query from the store. We take the question from the most recent user message, so follow-up questions get fresh context. The second argument to similaritySearch, 100, is the maximum number of documents to return.

```ts
const questions = messages
  .filter((m) => m.role === 'user')
  .map((m) => m.content);
const latestQuestion = questions[questions.length - 1] || '';

const store = await createStore();
const results = await store.similaritySearch(latestQuestion, 100);
```

Finally, we send the user’s query and the related documents to the OpenAI API to get a response, and then return the response to the user. In this tutorial, we use the gpt-4 model. You can swap in another chat model available to your account.

```ts
const response = await openai.chat.completions.create({
  messages: [
    {
      content: `You're a helpful assistant. You're here to help me with my questions.`,
      role: 'system',
    },
    {
      content: `
Please answer the following question using the provided context.
If the context is not provided, please simply say that you're not able to answer
the question.

Question:
${latestQuestion}

Context:
${results.map((r) => r.pageContent).join('\n')}
`,
      role: 'user',
    },
  ],
  model: 'gpt-4',
  stream: true,
  temperature: 0,
});
```

We use a very simple prompt. The system message sets up the assistant, and the user message asks OpenAI to answer the question using the provided context. We also set the model to gpt-4 and the temperature to 0. Our goal is to keep the AI’s answers within the scope of the context instead of letting it get creative, which can often lead to hallucination. A temperature of 0 makes the output more deterministic. It reduces the risk of hallucination but doesn’t eliminate it.
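Moving the prompt into a small function makes it easier to test and tweak. The sketch below uses a hypothetical helper named buildMessages. It reproduces the prompt text from the route, puts the instructions in a system message (the role the chat completions API provides for instructions), and joins the retrieved documents with newline characters.

```typescript
// Hypothetical helper that builds the chat messages for the completion request.
type PromptMessage =
  | { role: 'system'; content: string }
  | { role: 'user'; content: string };

function buildMessages(question: string, contextDocs: string[]): PromptMessage[] {
  return [
    {
      role: 'system',
      content: "You're a helpful assistant. You're here to help me with my questions.",
    },
    {
      role: 'user',
      content: [
        'Please answer the following question using the provided context.',
        "If the context is not provided, please simply say that you're not able to answer the question.",
        '',
        'Question:',
        question,
        '',
        'Context:',
        contextDocs.join('\n'), // one retrieved document per line
      ].join('\n'),
    },
  ];
}
```

In the route, this would be used as messages: buildMessages(latestQuestion, results.map((r) => r.pageContent)).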

And that’s it! Now we can try chatting with the chatbot, our virtual personal assistant.

Wrapping Up

We’ve just built a simple chatbot! There’s certainly room to make it more advanced. As mentioned in this tutorial, if you plan to use it in production, you should store your vector data in a proper database instead of in memory. You might also want to add more data to provide better context for answering user queries, or try tweaking the prompt to improve the AI’s responses.

Overall, I hope this helps you get started with building your next AI-powered application.

The post How to Create a Personalized AI Assistant with OpenAI appeared first on Hongkiat.